"Construction" Note:

I vastly expanded this page as a starting point for a video covering several areas I see as overlapping parts of what I do: Dance photography/videography, Education Tech, Learning Concepts, Journalism (subject knowledge and "getting across"), computer programming and web programming. These are all parts of my life and work history, and every photograph I take incorporates all of them, as well as history.

This file, as it is now, is way too unwieldy for practical use or even to keep topics organized, so my plan is to break it into a series of separate documents, with links in the panel to the left, and to interweave the existing panel links into a whole.

For now, wade through. I'll evolve this into an instructional script, or a set of such scripts. I also intend to set up a couple of self-published books on the web, as well as a lesson set in multiple documents. With all the added material, this is rough for now. My apologies. Better is coming.

Cheers,

Mike Strong

MultiMedia in Practice

Subject versus Device

"Subject Knowledge" is the most important camera skill.

Everything flows from that.

Don't get me wrong about the term multimedia. I use it casually, often enough. Still, there is a certain meaninglessness about the word "multimedia." I can't imagine showing up to shoot a show or event and bringing "multimedia." I have never worked a concert where someone was looking for their multimedia rather than their stills or video or slides or mic levels and placement, sound checks and so forth.

At the same time, we've reached a point in media, in general, where we lose contact with content or meaning as we let fast cuts dominate movies and video; the cuts prevent us from registering content. This is basically a montage technique gone stupid. Montage creates a feeling without letting you hold on to detail. The same mindset also promotes extended long takes (easier to do in digital), which exist to glorify the mechanism, pushing away thought and theme and concept. In radio, the aversion to the tiniest quantity of "dead air" leaves neither room nor time for the imagination, which radio can promote as we fill in the non-aural senses.

The central mistake people make when looking at a photograph or movie is to assume that a camera created the picture, as if a brush painted a picture or a stove cooked a meal. Most photographers, even when shooting any kind of specialty, such as dance, do what I call shooting the camera, rather than shooting the subject. I've yet to see an ad for a photo workshop, regardless of photo topic, which does not concentrate on the equipment rather than on subject knowledge.

Without the subject there is no point in the image. For years I've told people that were I to give a dance-photo workshop I would start with a week of tap lessons, beginning ballet and music-listening, then, only at the end, exercises in shooting to the music, in manual mode, using single-drive only. And no cameras until then. The dance lessons need to be first and need to be focused on. I've found over the years that I absolutely have to set my camera aside if I am to get anything from a dance lesson. Once my hand touches that camera, even a little, even once, my brain is lost to the camera. My lesson is lost. If this is a workshop for which you showed up and paid money, your money and your time are lost. Get the dance down first. You are not learning steps. Your body and senses are learning the feel of dance. When you do, at the end, pick up your camera again, your body memory will inform your camera usage.

In the same way, to me, speaking of "multimedia" puts the cart before the horse. While I've been shooting both stills and video for many years, and recording audio, and working with live shows and producing recorded shows, I never think of it as multimedia. I am always thinking of the subject first and then what I need to cover it. The methods needed to cover the subject determine the technical methods. I spend a good deal of time refreshing batteries or recharging batteries, clearing recording media, making sure all camera defaults haven't changed (they can), arranging which mics and cameras I will use and packing them into bags along with the tripods or light stands and any lights I need. I never, ever, think, "I'm getting my multimedia together."

The same goes when I tear down, load out and return home to download recording media, then synch and edit. These are just the means to store and edit the results from the show or other event. Plus, I am always making some sort of change in the "kit" I have available to work with. Maybe I should just call it "the kit-keeper job."

Long ago I grew a bit weary of being excited by each new "thing" or method and lusting after each new device. Computers and software have repeatedly been sold as a sort of magic elixir to cure anything. Now I wait a bit to determine whether something is really a new product, just a new package, new paint on the old package or flat-out snake oil. Sometimes the "new paint" is a better version and sometimes a worse version (such as Windows changing its default image-viewing and video-viewing applications to ones which are far slower and take longer to start than the old ones).

Many words can be used for the media mix which forms a single presentation, whether it is a standard stage mix or overwhelms the senses. Here is a quick list off the top of my head. I won't even try to pretend it is complete or definitive.

- Environmental Visual Jukebox (the first description of the event producing our term),

- Illustrated Music,

- Multimedia (our term),

- Multi-media (early spelling of our term),

- Music-Cum-Visuals,

- Discotheque,

- Psychedelic,

- Ambient Video (from the DJ's projections at a local social dance for New Years 2020),

- Massed Media,

- New Media, generally used to describe news media on the web in the late 90's to early 2000's, but largely a defunct term now,

- Rich Media,

- Multi-Platform (claim seen on a video documentary),

- Multipurpose and I'll just add in multi-task as a related concept (really, mostly, time-sharing)

- --- and, remember the term ---

- Audio-Visual (AV).

- this: "Director of Visual and Immersive Experiences." - title for a position at National Geographic

Or the newer ideas of combining live cameras, projections, and live stage as part of a single live performance where the players merge between the screen and the stage (as in my "Tour of the Bolender Center" for KC Ballet in 2011 - http://www.mikestrongphoto.com/CV_Galleries/VideoEmbed_BalletBall.htm).

Stage locations run left to right across the stage. In person, our brains determine who is featured. On camera, this just looks weak. The easiest way to think of shifting stage locations for camera is to fold the left-right alignment backward from center stage. Cameras need front-to-back locations for performers in order to produce a feel equivalent to a live show.

On stage, locations for players are 90 degrees opposed to locations for camera (left to right for live events, front to back for camera framing). You can see this illustration above.
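One hypothetical way to picture the "fold" is to treat each performer's left-right distance from center stage as a depth behind the front line, so the featured performer at center stays closest to camera and the outer performers fall upstage. The function names, the meters, and the one-to-one mapping are assumptions for illustration only, not a staging rule.

```python
def fold_to_depth(stage_x: float, center_x: float = 0.0, scale: float = 1.0) -> float:
    """Map a left-right stage position to a front-to-back depth for camera.

    Hypothetical illustration: the farther a performer stands from center,
    the farther upstage (away from camera) the folded position is.
    """
    return abs(stage_x - center_x) * scale


def fold_lineup(positions: dict[str, float], center_x: float = 0.0) -> list[tuple[str, float]]:
    """Return performers ordered from nearest to farthest from camera."""
    folded = {name: fold_to_depth(x, center_x) for name, x in positions.items()}
    # sorted() is stable, so performers at equal depth keep their original order.
    return sorted(folded.items(), key=lambda item: item[1])


if __name__ == "__main__":
    # A left-to-right line as a live audience sees it (assumed meters from center).
    line = {"soloist": 0.0, "left pair": -2.0, "right pair": 2.0, "far left": -4.0}
    for name, depth in fold_lineup(line):
        print(f"{name}: {depth:.1f} m upstage")
```

The sketch only captures the geometry; who is featured and how tight the grouping should be are still judgment calls made from knowing the piece.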

Go here for an expanded coverage about shooting for dance:

http://www.mikestrongphoto.com/CV_Galleries/PhotoCV_SubjectKnowedgeForDance.htm

Multimedia #1 - Overwhelming the Senses

Multimedia is a fuzzy term, first coined in 1966, although the elements of using multiple forms of presentation together have been with us in spectacle and performance throughout recorded history, even from the beginnings of our species, as suggested in the performance of a jungle shaman in "A Man Called Bee." In that film, an anthropologist films a Yanomamö shaman slapping his legs, buzzing his lips and in general giving a performance which was probably mesmerizing to his audience. Watching him I thought he would be great with a fuzz box and a set of lights.

The term "Multimedia" as first used, described a particular event with immersive audio-visual technology flooding the senses.

When I started into serious photography in the summer of 1967 (I'd shot pictures before, but without real concentration), the term "multimedia" was new by exactly one year. Although the word "multimedia" was coined for an event in the summer of 1966, the impetus for the event was a 1965 Christmas party in Bobb Goldsteinn's Greenwich Village studio. According to Wikipedia, Goldsteinn appealed to multiple senses at the same time. He "... created an environmental visual jukebox that illustrated music by surrounding the spectator with manually synchronized light effects, slides, films, moving screens, and curtains of light under mirror balls that kept the room in spin."

In July of 1966 Goldsteinn coined the term "multimedia" to promote his resulting show, "LightWorks at L'Oursin," in Southampton, Long Island, NY. The next month (August) Variety writer Richard Albarino wrote, "Brainchild of songscribe-comic Bob (‘Washington Square’) Goldstein, the ‘Lightworks’ is the latest multi-media music-cum-visuals to debut as discothèque fare." This was hardly the first time that combinations of stage effects for an event were used. It was the first time they were given the name "multimedia," a label which remains with us today. Later the work of political consultant David Sawyer was termed "multimedia." Sawyer's wife Iris was one of Goldsteinn's producers. The term itself quickly merged into the culture and was used in various ways, although in the last 30 years the usage has shifted.

The internet takes the term "multimedia" and uses it to describe "documents" and other files ("resources") on the internet, usually accessible via the web as multimedia when combining text, databases, images and video in various combinations. In the meantime theatrical shows seldom use the term. This announcement from my email helps illustrate why I have trouble using the term "multimedia" as if the term clearly defined itself:

Dreamflower Circus

Welcome to Dreamflower Circus where imagination meets reality and science. Immerse yourself in the experience as aerialist soar through the air, contortionists twist in nightmarish shapes,and dancers come in and out of your vision. With live interactive projections, live music, installation art, a virtual reality room, live art demos, and a chance to see what your brain on art looks like. Oh and there will be magic, how else do we bring dreams to life without a little magic.

The "Dreamflower Circus" announcement included an illustration labeled "mixed media." This was not billed as a multimedia show, just a show with a mix of entertainment methods and entertainers performing under a theme title. Which of those show elements would you choose to handle if you are the multimedia person? In my experience that goes to the producer and director or directors to dole out responsibilities and put together the crew who will bring the show together, a themed variety show.

Multimedia #2 - Text, Audio and Visual Elements Combined in a Document

More simply, "multimedia" refers to the use of various media in a single work, such as a show or web page with text, images, lights, audio and video on any of several "platforms." As generally used today, the media in multimedia are usually electronic, or at least electronically created or controlled, as well as constrained within the boundaries of the computer, phone or tablet, and seldom immersive except for a small, growing and developing "virtual reality" segment. A few examples:

- Texts

- Audio: (in various forms and combinations, each also considered a medium: wax cylinders, lacquer or vinyl records, wire recorders, magnetic tape [reel to reel, cassette], wav files, mp3 files, live sound, sound tracks [sound on film], sound tracks with video, mono, stereo, 5.1, Dolby, etc.)

- Still Images (by themselves each considered a medium: photos, drawings, paintings, and so forth)

- Moving pictures:

- animations

- video

- movies

- Posters and banners

- Post cards

- Email, often with multiple media attached or embedded

- Presentations:

- title slides

- PowerPoint

- stage shows

- Infographics (which I often find confusing, muddled and self-impressed, another hyped "technology," rather than using well-expressed text information)

- Interactive interfaces (mice, touchpads, touchscreens, voting buttons, etc.)

- Video connection apps for phone and computer (laptop, desktop)

- Live streaming of events from any device including smart phones

- Tele and video conferencing, organizing and messaging technologies which seem to sprout faster than dandelions such as:

- Apple FaceTime (iPhone & iPad only),

- Zoom,

- Microsoft Teams, (business and school only, other usage defaults to Skype)

- Skype, (Microsoft default for general connection. For school or business, use Teams)

- Google Duo,

- Google Meet, (formerly Google Hangouts - for members of Google's G Suite [formerly Google Apps], although Gmail users can join meetings)

- TikTok,

- Snapchat,

- Dubsmash,

- Cisco WebEx,

- BT MeetMe (phone with passcode, has scheduling add-ins for MS Outlook),

- Facebook Messenger,

- WhatsApp Messenger,

- Houseparty,

- GetVokl,

- Discord,

- And others ...

- Virtual reality and immersive 360 environment

- Video combining film, stills, drawings, paintings, semi-animations of stills to embedded drawings or paintings with changing visual animation (as labeled for "Unladylike" from American Masters) and constantly changing, demi-montage technique of presentation and cuts with voice overs.

Media within Media

Even closed captions and subtitles, created as auxiliaries to movies and video, are their own media. They support better understanding of the scripted audio for hearing viewers and let deaf or hard-of-hearing viewers "see" the audio. They are essentially another version of the movie. In writing closed captions I found that the text-on-screen delivery creates another sense of the script and shifts what you look at. In a similar vein are the "title" and "alt" attributes in web pages, which offer information to non-sighted readers but also carry other information, such as cutline IDs. Alt and title text, in turn, are used by screen readers, which adds yet another form of media and another version of the page for a blind visitor. How that mixes with the body text shifts how the page content is revealed.
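To see that "other version" of a page, you can pull out the alt and title text on their own. This is a minimal sketch using Python's standard `html.parser` module; the sample markup is hypothetical, and real screen readers apply many more rules (ARIA labels, roles, reading order) than this collector does.

```python
from html.parser import HTMLParser


class AltTextCollector(HTMLParser):
    """Collect alt and title text, roughly the layer a screen reader may announce.

    Simplified sketch: it records every non-empty alt or title attribute
    in document order and ignores everything else.
    """

    def __init__(self) -> None:
        super().__init__()
        self.items: list[tuple[str, str, str]] = []  # (tag, attribute, text)

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("alt", "title") and value:
                self.items.append((tag, name, value))


if __name__ == "__main__":
    # Hypothetical page fragment for illustration.
    page = (
        "<p>Rehearsal, 2010.</p>"
        '<img src="leap.gif" alt="Dancer in a split leap" title="Studio rehearsal">'
    )
    collector = AltTextCollector()
    collector.feed(page)
    for tag, attr, text in collector.items:
        print(f"{tag} {attr}: {text}")
```

Reading the collected lines by themselves, without the body text, gives a rough sense of how differently the page comes across to a blind visitor.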

Media So Common We Forget it is Technology

Don't forget paper (or carved stone), long-proven media technologies which continue to function for centuries without batteries or mains power. Paper still gives my brain the opportunity to reach out and grab the material. That is how the material gets to us, when our brains reach out to the source, not when a technology attempts to shove it at us. Imagine shoving rolled-up paper into a hole in our heads or bashing our heads with the Rosetta stone. Information doesn't work that way. It can't be forced with technology. Or, as I like to say, a camera won't take the picture for you but the wrong kind of camera can get in your way.

Or more recently created media we seldom include as "multimedia," such as PowerPoint, which includes text, animations, video, still images and audio, and is often projected to an audience as part of a presentation. It is one of several types of presentation software. It is the direct descendant of title slides, which used to be created using multi-exposure vertical cameras with enlarger heads and litho film, exposing long rolls of color slide film (usually Ektachrome) for use in a Carousel or other slide projector. Back in the 1970s we did title-slide work in the photo labs I was in. This was gradually supplanted by early digital slides, using film printers to create Ektachrome slides or slide strips (1/2-frame strips of 35mm film which pulled through a projector one frame at a time - used a lot in classrooms).

Immersions

Virtual reality, in the form of goggles or headsets, is the latest iteration of VR over almost 40 years, going back to game rooms with booths with screens for early electronic gamers. The latest versions are worlds beyond the 1980s, but we thought it was great, even back then. At the same time the concept was used in flight simulators, including "Flight Simulator" software which included various airplanes you could fly on your Commodore-64, Amiga and other personal computers. This was greatly extended in commercial simulators used to train pilots, with a much more immersive experience (more screens outside the cockpit windows), complete with hydraulics simulating some of the seat-of-the-pants feel of flying. Simulators have a long history going back to 1929 with the first Link Trainer prototype, created as a way to teach pilots how to fly on instruments. The first commercial model was sold in 1934 for $3,500 after the Army Air Corps lost 12 pilots flying mail under instrument flying conditions.

The theater space with rows of seats and aisles on the sides and middle is so common we seldom realize where it comes from or what the architecture dictates. We used to film the sohrabi festival each year at UMKC in an event room in the student union with flat spaces, a stage at one end, folding chairs for people to watch and food and other tables around the periphery. People would wander back and forth between seats and tables, talking and meeting as performers were on stage. When the university built a new student union they included a small lecture stage and theater combination with raked seats in a narrow space leading to a stage. That year's sohrabi was held in that theater space. Right away people were walking up and down the aisles as they were used to, talking to friends, but there were no food tables, and Nicole and I kept hearing calls to be quiet or sit down. We finally realized that the architecture of the space dictated much of the expected behavior in that space. The sohrabi was like a town-square festival and a formal theater space really didn't work well. The next year sohrabi was held in a large flat event room at the top of the union.

It also made us realize that even these formal settings were not originally used as we use them now, nor were the rules of decorum the same. Operas are still very long, but back then everyone knew the plot. More than that, people were often there to see people they knew, so they would slip in and out of the formal space. They had no radio or television or any other entertainment. So they were not there just to show up, sit for three hours, applaud, exit and go home. They were there for more social reasons.

And this. Not really virtual reality, but I think you can see how it parallels. Then there is what I call "The Expanse," for which there is no camera in the world capable of creating the feeling of space and power in the landscape and sky that you get from simply standing outside in the middle of it, on the prairie, letting it seep into your bones. Even in your car, driving west on I-70, you feel the power of sheer space. My first trip from Kansas City to Hays reminded me of the power of sheer space. It was a replay of moving back to the midwest in 1976 after living in upstate New York, with its tiny towns (Mayberry would be large in comparison) and with dots on the map 5 or 6 miles apart instead of the 50 miles in the midwest. Back then I realized I had forgotten the physical sense of size. On that first trip to Hays I realized that I had again gotten used to a closer space, and now I again felt the draw of the openness.

Partnering With our Brains

Selecting by our Brains

Our brains select a little at a time, building it into a full-picture internal understanding. A little at a time is all the brain can take in at once. Think of it like building a ship in a bottle. All the materials are assembled, folded, put through the neck of the bottle, then expanded. A fully-expanded model would be broken if you tried to shove the model ship through the neck. Similarly, any attempt to push more information at us beyond what our brain's "bottleneck" can let through is simply thrown away (not selected) by our brains. If too much is shoved our way the brain will just shut it out and may close down for most input. Physical sensors get overwhelmed and shut down, essentially taking cover.

For example, music played too loud is mostly lost as the pressure waves slam into our audio sensors ("hair cells") like a wrecking ball, losing the delicate full range our ears are capable of hearing, even to the point of destroying those sensor hairs and causing physical loss of hearing. With too much sound of any kind, such as a confusion of sound, our listening brain starts shutting down, selecting only some items. For sound that is too loud, as with any wrecking ball, the hair cells destroyed for each person and event are never predictable, wiping out random segments of the frequency spectrum. That means that merely turning up the volume on a hearing aid won't bring comprehension. Generally the high-volume audio "wrecking ball" destroys hearing a little at a time, sneaking in unnoticed.

Matching to our Brains

Again, in terms of getting it backward. For decades film and then video students were taught that our perception of motion in a motion picture was because of the image sequences and "persistence of vision." That is because for decades we asked the wrong question. We should have simply asked how we perceive motion, not how movies created a sense of motion. Cart before horse. The technical device, again, can't force the brain to do something it isn't designed to do. We perceive motion in film and video when the frame rate matches the "sampling" mechanism in the brain. The images below help to illustrate this.

See a fuller treatment here (this is the "Sense of Motion" entry in the top left menu panel):

http://www.mikestrongphoto.com/CV_Galleries/LessonExamples/MultiMedia/ApparentMotion.htm

Here Eric Sobbe is shown in studio rehearsal in 2010 for the Chinese part in The Nutcracker for the American Youth Ballet, as a professional dancer working with the kids. I shot Eric at the top of each of four sets of two leaps. In each set of two leaps he tucks his legs underneath, then he hits the floor and leaps again, this time with a split jump. There are four sets of these, so eight in all. For this example animation I used one of the sets, one tuck and one split. Then I put them together in an animated GIF and adjusted times.

At no point does Eric move from the split to the tuck directly or the tuck to the split directly. He always lands after each and then jumps again. So there are no shots showing anything moving between tuck and split, nor could there be. However, in looking at the GIF, with only these two images, Eric appears to float in the air moving from tuck to split and back again and even seems to have intermediate positions (mostly perceived in a larger version). Of course there are none in the original source images. And, because there are no images of any tweened positions, there can't be any kind of "persistence of vision" (a theory disproved all the way back in 1911 by Max Wertheimer - which means film schools have been teaching this all wrong for decades - what I call "persistence of error").

The point of this is to reinforce the concept that anything we do with technology needs to fit in with how the brain works if we want that technology to transfer information. The effect above doesn't happen if you get the timing wrong. Most applications of "multimedia" simply shove technology out the door, into our faces, with little if any consideration of whether any message will be absorbed or any effects will be fully appreciated. As a result a lot of hard work is just thrown away.
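Since the effect depends on timing, it helps to know how a GIF stores it: the GIF89a format records each frame's delay in hundredths of a second. The sketch below converts display times in milliseconds to those units and lists when each image appears in a looping two-frame animation. The file names and the 250 ms durations are hypothetical placeholders, not the timings used for the Eric Sobbe GIF, which aren't given; in practice you adjust them by eye until the float appears.

```python
def gif_delays_cs(durations_ms: list[int]) -> list[int]:
    """Convert per-frame display times in milliseconds to GIF delay units.

    GIF89a stores each frame's delay in hundredths of a second, so times
    are rounded to whole hundredths; this sketch never goes below 1 unit.
    """
    return [max(1, round(ms / 10)) for ms in durations_ms]


def playback_timeline(frames: list[str], durations_ms: list[int], cycles: int = 2) -> list[tuple[int, str]]:
    """List (start_ms, frame) pairs for a looping animation."""
    timeline, t = [], 0
    for _ in range(cycles):
        for frame, ms in zip(frames, durations_ms):
            timeline.append((t, frame))
            t += ms
    return timeline


if __name__ == "__main__":
    frames = ["tuck.jpg", "split.jpg"]  # hypothetical file names
    durations = [250, 250]  # assumed starting values, tuned by eye in practice
    print(gif_delays_cs(durations))
    for start, frame in playback_timeline(frames, durations):
        print(f"{start:5d} ms  {frame}")
```

Only two source images alternate in this timeline; any in-between positions a viewer reports are supplied by the brain, not by the file.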

The Sacred 24 - The Real Origin of 24 fps

What I am about to say is true. It is also heresy in almost any "cinema" discussion and will generate flaming online. Here is an example of treating a technical feature as the defining specification for a particular kind of experience. It is based not on the actual history but on an attempt to rationalize current practices as the result of near-sacred decisions in the past. I assure you there is a history, and it doesn't match any claims for esthetics which go into believing that 24 frames per second is the only biblically superior frame rate. That is the entire problem with elevating 24 frames per second as the standard which defines film, and especially video, as "cinema." Time and again we are given a choice of shooting plain video or shooting in "cinema," and usually the only difference is in frames per second. The real story is a typical piece of back-of-the-envelope practical engineering.

In 1927 the competition was to be first with a commercial sound-film technology. Warner was developing Vitaphone, film synched with records for sound. Fox was developing Movietone, sound on film, with an optical sound track printed on the side of the film. At the time there was only one commercial film gauge, 35mm, and one frame ratio, 4 to 3. No one counted film in frames, only in feet per minute. In 1927 Stanley Watkins was chief engineer with Western Electric and working with Warners. He got together with Warner's chief projectionist to go over standard film speeds already in use. Silent film was shot at about 60 feet per minute, though it was projected at various speeds from 60 fpm to 90 or 100+ feet per minute, because there was no sound to distort. The better houses ran films at the original speed while lesser houses ran them faster in order to get more showings per day. So Watkins decided to compromise at the round number of 90 feet per minute.

Because 35mm film carries 16 frames per foot, that rate works out to 90 x 16 / 60 = 24 frames per second, but no one needed to care about frames per second until working at other film widths, such as 16mm or 8mm. Watkins, in 1961, stated that if they had really done it right they might have researched for 6 months or so and come up with a better rate. Fox was doing its research and was considering 85 feet per minute. If Fox had won that race we would have been running cinema at 22-2/3 frames per second instead of 24 fps (remember, they were thinking in feet, not in frames).
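The conversion from feet per minute to frames per second is simple arithmetic. This sketch assumes standard 35mm four-perforation film at 16 frames per foot and uses Python's `fractions` module so the Fox figure comes out exactly rather than as a rounded decimal.

```python
from fractions import Fraction

# Assumption: standard 35mm four-perforation film, 16 frames per foot.
FRAMES_PER_FOOT_35MM = 16


def feet_per_minute_to_fps(feet_per_minute: int, frames_per_foot: int = FRAMES_PER_FOOT_35MM) -> Fraction:
    """Convert a film speed in feet per minute to exact frames per second."""
    return Fraction(feet_per_minute * frames_per_foot, 60)


if __name__ == "__main__":
    for label, fpm in [("silent shooting", 60), ("Fox proposal", 85), ("Watkins/Warner", 90)]:
        fps = feet_per_minute_to_fps(fpm)
        print(f"{label}: {fpm} ft/min = {fps} fps ({float(fps):.2f})")
```

Run as-is, it gives 16 fps for 60 ft/min, 68/3 (22-2/3) fps for 85 ft/min and 24 fps for 90 ft/min: the "sacred" number falls straight out of a round footage figure.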

By 1931 the sound-on-film system had replaced the sound-on-disk system because it was so much easier to fix when film broke, even if the audio was not quite as good. With sound on disk, if the film broke you had to replace the lost section with the same number of blank frames, otherwise you would wind up out of synch. With the sound track on film, the most a break could cost you would be a second's worth of out-of-synch sound. By 1931 the Fox system was designed to run at the same 90 feet per minute rate in order to fit in with the existing system established by Warner. So, in essence, pure chance and a casual compromise set the speed of film travel, and easier handling brought in the sound-track-on-film method.
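Why the blank frames mattered with sound on disk: the record keeps turning at its own fixed speed, so every frame spliced out of the print without a replacement puts the picture that much ahead of the sound for the rest of the reel. This sketch assumes projection at 24 fps and ignores any other drift; the function name is hypothetical.

```python
def reel_offset_seconds(frames_removed: int, blank_frames_inserted: int = 0, fps: float = 24.0) -> float:
    """Seconds the picture runs ahead of a separate disc after a splice.

    Assumes the disc is the fixed clock and the projector runs at fps,
    so each missing frame is 1/fps seconds of lost picture time.
    """
    return (frames_removed - blank_frames_inserted) / fps


if __name__ == "__main__":
    print(reel_offset_seconds(12))      # half a second out of synch
    print(reel_offset_seconds(12, 12))  # blanks restored: back in synch
    print(reel_offset_seconds(48))      # two seconds out of synch
```

With the track printed on the film, sound and picture travel together, so a splice removes both at once instead of building up an offset for the rest of the reel.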

One of the arguments I often heard, and, sorry to say, repeated in good faith, was that 24 fps was a cost compromise between bad sound at 16 fps (silent shooting speed) and something faster, which would mean more film and so more cost. But what I, and seemingly everyone else, didn't pay attention to was that sound quality for the Warner system was independent of the film speed, because the sound came from a record which played at 33 1/3 rpm, a speed which became another standard spec [on this page below], though not for consumer records until 1948. So, using the audio-on-disk system, the film's frame rate didn't affect sound quality in any way.

There was never a grand aesthetic vision determining the frame rates. The original decision wasn't even about frames per second but about feet per minute. Note that we don't talk about "frameage" but we do talk about "footage" as a measure of shooting times, even for digital files which are not measured in feet. The "esthetics" of 24 frames per second "cinema" was an artifact of a practical engineering decision in the moment. We have far better cameras today but insist on remaining in 1927. The people in 1927 were not trying to stay in that year. If they had had more advanced tech at the time, they would have set it up.

For that matter, if the status of film in 1927 was the absolute gold aesthetic standard, then not only the frames-per-second rate but also the film proportions (aspect ratio, width to height) of 4:3, established in 1892 by Thomas Edison, should have been held to. In a sense, they were. Television had a 4:3 format, mainly because that was what people expected, again a practical decision. Once television was established and expanding in the early 1950's, movie people saw a potential rival and invented "widescreen" for projection in theaters. Like good practical engineers they figured out a way to use the existing equipment with a small modification, the lens. They shot with "anamorphic" lenses which took in a wide horizontal angle and compressed it to fit in a standard 4:3 frame. Then, on projection, they used an anamorphic projection lens to spread the 4:3 to widescreen, with various ratios for a while but eventually standardized at 2.35:1. An aspect ratio of 1.85:1 was introduced in 1953. This was almost the same as the current 16:9 used in today's television.
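To put numbers on "almost the same," this sketch expresses each ratio mentioned above as width divided by height, shows the picture height each would give at an assumed 1920-pixel width, and measures how much wider 1.85:1 is than 16:9. The 1920 width is an arbitrary example, not a standard the text relies on.

```python
from fractions import Fraction

# Width-to-height ratios mentioned in the text.
RATIOS = {
    "Edison 4:3": Fraction(4, 3),
    "TV 16:9": Fraction(16, 9),
    "1.85:1": Fraction(185, 100),
    "anamorphic 2.35:1": Fraction(235, 100),
}


def height_for_width(width: int, ratio: Fraction) -> float:
    """Picture height for a given width at a given aspect ratio."""
    return float(width / ratio)


def percent_wider(a: Fraction, b: Fraction) -> float:
    """How much wider ratio a is than ratio b, in percent."""
    return float((a - b) / b * 100)


if __name__ == "__main__":
    for name, r in RATIOS.items():
        print(f"{name:>18}: {float(r):.3f}, height at 1920 wide = {height_for_width(1920, r):.0f}")
    diff = percent_wider(RATIOS["1.85:1"], RATIOS["TV 16:9"])
    print(f"1.85:1 is about {diff:.1f}% wider than 16:9")
```

The difference between 1.85:1 and 16:9 comes out to roughly 4 percent, about 40 pixels of height at that width.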

In a similar practical way, the 25 frames per second television rate in Europe and the 30 frames per second rate in the US were decided for the most practical of reasons. They needed a standard clock to trigger frames on television cameras. The line current (50 hertz in Europe and 60 hertz in America) provided a great timer to synchronize video frames. And, not exactly for esthetic reasons, but because of the limited image retention on a cathode ray tube (the phosphors died out too soon), each frame was divided into two interlaced fields, each carrying alternate lines, or in other words 50 fields per second in Europe and 60 in America.
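The relationship between mains frequency, fields and frames can be sketched in a few lines. This is a simplified model that assumes one field per mains cycle and two interlaced fields per frame; it ignores real-world details of broadcast standards such as line counts and later adjustments to the US rate.

```python
def frames_per_second(mains_hz: int, fields_per_frame: int = 2) -> float:
    """Frame rate when each field is locked to one cycle of mains current."""
    return mains_hz / fields_per_frame


def split_into_fields(frame_lines: list[str]) -> tuple[list[str], list[str]]:
    """Split one frame's scan lines into two interlaced fields.

    The first field carries lines 1, 3, 5, ... and the second carries
    lines 2, 4, 6, ...; shown one after the other, they interleave to
    rebuild the full frame.
    """
    return frame_lines[0::2], frame_lines[1::2]


if __name__ == "__main__":
    print(frames_per_second(50), frames_per_second(60))
    first, second = split_into_fields([f"line {n}" for n in range(1, 7)])
    print(first)
    print(second)
```

Each field refreshes the screen before the phosphors fade, while a complete frame still takes two fields to build.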

Only in the last couple of decades, as video cameras and sensors have come to exceed what film can do, has the "cinema" term been used to differentiate "mere" video from the more snobbish "cinema," usually just video at 24 frames per second. Yet the cameras are more and more interchangeable. The highest-level video (as cinema) cameras, always shooting at 24 frames per second (of course), are used like social classes to claim top rank, even though many of the lowest-ranking video cameras are not only better than many or most of the old film cameras but are even used for cinema. Examples include the documentary "For Sama," shot on a DSLR by a young Syrian woman filming her own life with her husband and their baby, and "Midnight Traveler," shot on three smartphones by a family "filming" their own flight from death threats. "For Sama" is cinematic, regardless of frame rate or camera, and won 59 international awards and had 40 other nominations at this writing (https://www.imdb.com/title/tt9617456/awards).

Then My Eyes Crossed, Several Times Over

Not long after writing this I saw an email from Videomaker magazine. They were featuring an article titled "How to Make a Movie" by Sean Berry. I've done documentaries and a movie and more than enough productions, hundreds at least, of long and medium works, but thought I would look at the article anyway. To be honest, the magazine hasn't had much for me for a couple of decades. In reading, I saw a section of the article which repeated the same trope I've just been talking about. Just to reinforce the concept of persistence of error, here is a cut and paste from the article (https://www.videomaker.com/how-to/shooting/how-to-make-a-movie-everything-you-need-to-know/):

How to make a movie: production

Camera settings

Typically, when shooting a film, you should aim for shooting at 24 frames per second. Why? Almost every film is shot at 24 fps, so your film will automatically look more cinematic. However, if you shoot a bit higher, that is still okay. Most video cameras are automatically set to 30 fps, but you can likely change the settings to 24 fps. Additionally, if you want to sprinkle in some slow-motion shots in your film, shoot at higher frame rates like 60 fps and 120 fps and later slow it down to 24 fps in post. Slow-motion almost always makes footage more cinematic when. Just don’t overdo it.
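The slow-motion part of that advice is plain arithmetic: frames captured at a higher rate and played back at a lower one stretch time by the ratio of the two rates. This sketch assumes playback at 24 fps, as in the quoted advice; the function names are illustrative.

```python
def slowdown_factor(capture_fps: float, playback_fps: float = 24.0) -> float:
    """How many times slower motion appears when played back at playback_fps."""
    return capture_fps / playback_fps


def playback_seconds(clip_seconds: float, capture_fps: float, playback_fps: float = 24.0) -> float:
    """Screen time for a clip captured at capture_fps and played at playback_fps."""
    return clip_seconds * slowdown_factor(capture_fps, playback_fps)


if __name__ == "__main__":
    for fps in (60, 120):
        print(f"{fps} fps played at 24 fps: {slowdown_factor(fps):.1f}x slower, "
              f"a 2-second moment lasts {playback_seconds(2, fps):.1f} s")
```

Nothing in the calculation depends on 24 being special; playing the same 120 fps footage at 30 fps simply gives a 4x slowdown instead of 5x.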

Again, 24 is some mysterious and sacred number to these promoters of 24. In this case the author's picture looks pretty young, but I also hear this from people my age. I have to repeat: there was NEVER any aesthetic consideration or research behind the film speed now described as 24 frames per second. It was never about frames but about footage (90 feet per minute), chosen to match the projection rates being used in cinema houses for silent-film showings in 1927.

It is a stupid place (there, I've said the "s" word) to get hung up. Resolution, tonal range, color balance and framing are the tools that go into an image, whether film, video, digital stills, oil paint, watercolor, charcoal, frescoes or a tapestry. And giving viewers time to register what is happening, and who someone is, is crucial to establishing a connection with the work, whether it is a movie, a radio essay or anything else in which you are telling a story.

If 24 frames per second makes something cinematic, then what were all the silent movies shot at 60 feet per minute (which works out to 16 frames per second)? Just waiting for cinema to come along some day? Nor does it take massive cameras.
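The footage-to-frames arithmetic behind those two speeds is easy to check. A minimal sketch, assuming standard 35mm film with 4-perforation frames (16 frames per foot); other gauges, such as 16mm at 40 frames per foot, need a different constant:

```python
# Standard 35mm film with 4-perforation frames carries 16 frames per foot
# (64 perforations per foot / 4 perforations per frame).
FRAMES_PER_FOOT_35MM = 16

def feet_per_minute_to_fps(feet_per_minute: float,
                           frames_per_foot: int = FRAMES_PER_FOOT_35MM) -> float:
    """Convert a film transport speed in feet per minute to frames per second."""
    frames_per_minute = feet_per_minute * frames_per_foot
    return frames_per_minute / 60  # 60 seconds per minute

if __name__ == "__main__":
    for speed in (60, 90):
        print(f"{speed} ft/min -> {feet_per_minute_to_fps(speed):g} fps")
    # prints: 60 ft/min -> 16 fps
    #         90 ft/min -> 24 fps
```

So "24 fps" is simply 90 feet per minute restated in frames.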

The multiple award-winning "For Sama" is (copy and paste from the film's website) "... a feature documentary ... of a 26-year old female Syrian filmmaker, Waad al-Kateab, who filmed her life in rebel-held" Syria "as she falls in love, gets married and gives birth to Sama." Her camera, a DSLR, shooting 30 fps video, is the same tool available to almost anyone.

And "Midnight Traveler" was shot on three Samsung smartphones, again at 30 fps. It follows Afghan filmmaker Hassan Fazili and his family as they run from the Taliban, who had issued a death order against him. They went to Tajikistan and Hungary and, after the film, to Germany, their status still uncertain. Editing and post-production in Premiere give the "film" its look.

For that matter, a lot of film, mostly for television, was shot at 30 frames per second. Two movies by Mike Todd, first "Oklahoma" and then "Around the World in 80 Days," were shot at two frame rates, 24 and 30 fps. In "Oklahoma" each scene was shot twice, once at 24 and once at 30 fps. The next year, for "Around the World in 80 Days," he shot each scene once, with both cameras strapped together and running at the same time. Mike Todd wanted a steadier picture and better image resolution (note that those are both aesthetic reasons). He did get steadier images and better resolution, but he didn't get theaters to change their equipment.

Cheers and Seinfeld are two shows shot on film at 30 fps that come quickly to mind. I haven't been able to find a full list of shows. If you are shooting film for television, which is broadcast at 30 fps, why would you shoot at a different rate from the target rate? If you shoot at 24 frames per second, you will have to either repeat every 4th frame or run a pulldown scheme (such as 3:2 pulldown), which borrows a field from one frame and mixes it with a field from the adjacent frame to make up the number of frames needed. Pulldown methods have ghosting artifacts. You could repeat a frame, but then you have to hold the film for that repeated frame and also maintain a very loose sound loop to keep the sound running at a steady rate over the sound head for the audio transfer. The best way, which was used on many television shows, is to shoot at the end-use target frame rate of 30 fps.

Frames created from 24 fps video using pulldown to generate an extra frame for playback at 30 fps. A field from one frame is joined with a field from the next frame, producing the "ghosting" artifact.

Single frames of the same video shown at the 24 fps rate it was shot at. This is also what you see if, out of each four frames, you get 5 frames by copying one of those frames twice.

If your editor allows it, you can take a 24 fps file and duplicate every 4th frame for playback at 30 fps. You will have a hard time noticing the expected jerkiness. US television runs at 30 fps (actually 29.97 fps), so why shoot at a different frame rate?

Here is my illustration of two "pulldown" schemes, 3:2 and 6:4. Remember, every frame of interlaced video is made up of two fields.

In a 3:2 pulldown (shown at left) every 4 frames shot at 24 fps are converted to 5 frames at 30 fps by grafting fields.

30-fps frame 1 gets all of 24-fps frame 1 (one of its fields is used again in the next frame).

30-fps frame 2 gets field 3 from 24-fps frame 1 and field 4 from 24-fps frame 2.

30-fps frame 3 gets field 5 from 24-fps frame 2 and field 6 from 24-fps frame 3.

30-fps frame 4 gets all of 24-fps frame 3 (one of its fields was already used in the previous frame).

30-fps frame 5 gets all of 24-fps frame 4.

The "ghosting" effect, which you see above in the top left two pictures, shows the mix of fields from two adjacent frames.
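The five-step field mapping above can be sketched as a short Python function. It is a simplified model: frames are just labels, and it ignores field order (top/bottom field dominance), which a real pulldown has to handle. Each 24-fps frame contributes three and then two fields in turn, and the fields are paired back up into 30-fps frames:

```python
from typing import List, Tuple

def pulldown_32(film_frames: List[str]) -> List[Tuple[str, str]]:
    """3:2 pulldown sketch: every four 24-fps frames become five 30-fps frames.

    Film frames alternately contribute 3 and 2 video fields (A A A, B B, C C C, D D),
    and consecutive fields are then paired into interlaced video frames.
    Field order (top/bottom) is ignored in this simplified model.
    """
    if len(film_frames) % 4 != 0:
        raise ValueError("expects a multiple of four film frames")
    fields: List[str] = []
    for i, frame in enumerate(film_frames):
        repeats = 3 if i % 2 == 0 else 2  # 1st and 3rd frames of each group give 3 fields
        fields.extend([frame] * repeats)
    # Every two fields form one 30-fps frame.
    return [(fields[j], fields[j + 1]) for j in range(0, len(fields), 2)]

if __name__ == "__main__":
    for n, (first, second) in enumerate(pulldown_32(["1", "2", "3", "4"]), start=1):
        kind = "whole frame" if first == second else "mixed fields (ghosting)"
        print(f"30-fps frame {n}: 24-fps frames {first} + {second} -> {kind}")
```

Run on four frames, it reproduces the list: frames 2 and 3 are the mixed, ghosted ones.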

To derive 30 fps without pulldown in Vegas Pro, my preferred editor since 1999, right-click on the clip (an "event" in Vegas terminology) and choose "Properties" from the pop-up menu. Then, in the "Video Event" tab of the dialog, choose "Disable resample". Leave both project and resample rates at 1.000.

This way, from every 4 frames shot at 24 fps, one frame is copied twice, giving you 5 frames and eliminating the ghosting artifact.

Even though you might expect to see judder, you will be hard pressed to detect any judder from this method.

Storage, Archiving and Access

First in line, in storing and delivering all this material, come the means to store, distribute and archive the recorded results and the recordings that are part of a presentation or just a record: the software and the hardware. I haven't known a time when the storage media, the hardware and most file formats have not changed. Many old machines can no longer operate, or cannot read the old files, for various reasons. Even when drive belts can be replaced, capstans are often flattened and can't be fixed or replaced. And even when the entire machine works, the media are often unreadable, whether through "digital rot," a lack of correctable checksums, a recording layer flaking off the substrate, or a file format not recognized by existing software.

Sometimes the machine works but nothing remains that will read the files or play the film (except for increasingly hard-to-find specialists with still-running old equipment). Often, especially in video, the worst formats are professional formats, which are always changing in "bleeding edge" fashion. The amateur or prosumer formats seem to last longest and have the best chance of being read by machines and software over time. Video/movie files stored for archive need to be revisited every few years to 1) make sure the hard drives still function [they have limited lives, but you are usually not told that], 2) copy the old files to new disks, both to keep the storage media current and to provide duplicate safety copies, and 3) copy and convert any file format that may be going extinct to the newest format expected to last a while.
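Step 2 of that routine, copying to a new disk while checking that the copies are good, can be sketched with Python's standard library. This is a minimal sketch under assumptions: the function names are hypothetical, it compares SHA-256 checksums of each original and its copy, and reading a copy back right away may come partly from the operating system's cache, so re-running the checksums after the new disk has been remounted is a stronger check. Format conversion (step 3) is not covered:

```python
import hashlib
import shutil
from pathlib import Path
from typing import List

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Checksum a file in 1 MiB chunks so large video files don't fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def copy_and_verify(src_root: Path, dst_root: Path) -> List[Path]:
    """Copy every file under src_root to dst_root, keeping relative paths.

    Returns the relative paths whose copies do not match their originals
    (an empty list means every copy verified).
    """
    mismatches: List[Path] = []
    for src in sorted(p for p in src_root.rglob("*") if p.is_file()):
        rel = src.relative_to(src_root)
        dst = dst_root / rel
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copy2 also preserves modification times
        if sha256_of(src) != sha256_of(dst):
            mismatches.append(rel)
    return mismatches

if __name__ == "__main__":
    import sys
    if len(sys.argv) != 3:
        print("usage: copy_verify.py OLD_DISK_ROOT NEW_DISK_ROOT")
    else:
        bad = copy_and_verify(Path(sys.argv[1]), Path(sys.argv[2]))
        print("all copies verified" if not bad else f"{len(bad)} mismatched: {bad}")
```

The new disk's root should not sit inside the old one, or the copy would walk into its own output.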

A quick, off-the-cuff longevity assessment (where longevity == reliability)

Gone, hard to find, difficult or impossible to read: Laserdiscs (the "wave of the future"), 17-inch LPs (for radio stations), U-Matic, 8-mm film, Sony Beta, daguerreotypes, calotypes, Hillotypes, collodion, film, 8-mm video tape, Ampex 2-inch quadruplex video tape (the first video tape), data tapes, punch tape, punch cards.

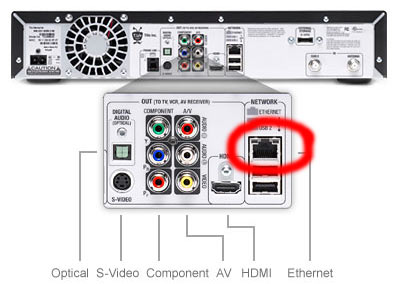

Still here, coming to an end, all but gone: mp3 devices, DVD, CD, Blu-ray (players and burners are becoming scarcer; Blu-ray carried more but is the last wave of optical disks), VHS (Video Home System), streaming from individual websites (especially in old formats like RealMedia), direct-to-DVD recorders for VHS-to-DVD transfer and on-air recording, and RGB/DVI cables or ports.

Moving in: streaming services that own the streaming, take a cut and/or ownership, and are starting to own the means of production (the tools and programs to edit and render), some in "the Cloud" and some on your "own" machine, while also becoming production studios in the mold of the old movie companies and networks (such as Amazon, Netflix, Hulu, YouTube, Crackle, Sling); and HDMI cables (because they carry HDCP [High-bandwidth Digital Content Protection] as standard, while on DVI cables, which carry the same video signals, HDCP was only an option).

Live streaming services and apps are also showing up everywhere: Facebook Live, Twitch, YouTube, Periscope, Vimeo Livestream, Ustream, Meerkat, OBS, Raptr, vMix, XSplit and others, along with hardware from Blackmagic Design and Elgato.

Part of archiving media requires extensive database cataloging. I have yet to find a good commercial cataloging program. Almost all of them have either a single-machine point of view or a "cloud" service point of view. Some years ago I wrote my own cataloging program, and I use it to catalog all working and new archive disks. My cataloging database keeps a record of the files, their paths and descriptions, and the disks those files are on. I label every disk with a name and put a physical label on the end of the disk. The working disks cycle through as a working archive in shelf storage. Some clients' shows are also archived on (copied to) disks holding files specifically for each client.
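A catalog like the one described, recording files, paths, descriptions and the disks they live on, maps naturally onto two database tables. The sketch below uses Python's built-in sqlite3 module; the table layout, column names, helper functions and sample disk labels are hypothetical illustrations, not the actual schema of the cataloging program described above:

```python
import sqlite3
from typing import List, Optional, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS disks (
    disk_id  INTEGER PRIMARY KEY,
    label    TEXT NOT NULL UNIQUE,  -- name written on the disk's physical label
    location TEXT                   -- e.g. which shelf it sits on
);
CREATE TABLE IF NOT EXISTS files (
    file_id     INTEGER PRIMARY KEY,
    disk_id     INTEGER NOT NULL REFERENCES disks(disk_id),
    path        TEXT NOT NULL,      -- path relative to the disk's root
    size_bytes  INTEGER,
    description TEXT,
    client      TEXT,               -- set when a show belongs to a particular client
    UNIQUE (disk_id, path)
);
"""

def open_catalog(db_path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(db_path)
    conn.executescript(SCHEMA)
    return conn

def add_file(conn: sqlite3.Connection, disk_label: str, path: str,
             size_bytes: Optional[int] = None, description: str = "",
             client: Optional[str] = None) -> None:
    """Record one file on one labeled disk, creating the disk entry if needed."""
    conn.execute("INSERT OR IGNORE INTO disks (label) VALUES (?)", (disk_label,))
    (disk_id,) = conn.execute(
        "SELECT disk_id FROM disks WHERE label = ?", (disk_label,)
    ).fetchone()
    conn.execute(
        "INSERT INTO files (disk_id, path, size_bytes, description, client) "
        "VALUES (?, ?, ?, ?, ?)",
        (disk_id, path, size_bytes, description, client),
    )
    conn.commit()

def find(conn: sqlite3.Connection, text: str) -> List[Tuple[str, str, str]]:
    """Which disk is it on? Search paths and descriptions for text."""
    pattern = f"%{text}%"
    return conn.execute(
        "SELECT d.label, f.path, f.description "
        "FROM files AS f JOIN disks AS d ON d.disk_id = f.disk_id "
        "WHERE f.path LIKE ? OR f.description LIKE ? "
        "ORDER BY d.label, f.path",
        (pattern, pattern),
    ).fetchall()

if __name__ == "__main__":
    catalog = open_catalog()  # in-memory; pass a filename to keep it
    add_file(catalog, "ARCHIVE-041", "2014/tap_day/cam1.mp4", description="Tap Day, camera 1")
    add_file(catalog, "ARCHIVE-041", "2014/tap_day/cam2.mp4", description="Tap Day, camera 2")
    for label, path, desc in find(catalog, "tap day"):
        print(f"{label}: {path} ({desc})")
```

Keeping disks in their own table is what lets one search answer "which shelf do I pull?" across every working and archive disk.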

Disclaimer

If I haven't made it clear by now, I should give a personal disclaimer to let you know where I am coming from. I've always been an early adopter, often on the "bleeding edge." After enough "bleeding," I tend to wait a little longer before deciding what to adopt, and whether to adopt it at all. I'm also from a time when small and efficient code was a default requirement. I still want to see that today.

In most cases we do not need "smart technologies" but smart applications of old technologies. To get information across, any method needs to work with the brain's ability to absorb information. Any "smart" technology needs constant updating and replacement at considerable expense in equipment, personnel and implementation time.

I was a science-oriented kid. A chemistry kit, an A.C. Gilbert Erector Set, a microscope, a 3-inch reflector telescope, electronics projects, crystal radios, model airplanes and ships built from scratch, and shelves of books formed my world.

I've been a technologist ever since. I was in a tech position in the Air Force (geodetics), and I've been a programmer since writing my first program in Fortran IV in the fall of 1966 on an IBM System/360.

My Uncle Bud (high school education only) was the first in his family to bring mechanization to the farm, changing from mules to tractors and from a wood stove and lanterns to electricity (which was quite a cooking adjustment for my Aunt Lill). Their son and daughter went on to graduate from ag school and are on the farm. I still remember their old party-line crank phone on the wall of their kitchen.

My maternal grandfather was one of the first dentists in the country to use dental x-rays, and in 1947 he received the Pierre Fauchard award for work contributing to facial reconstruction for war injuries. His father, whose parents brought him over from Ireland in 1848 as an infant in the last large wave, went armed against death threats for his part in starting a school for Black students in Maysville, Kentucky, somewhere around 1900; I never knew the details. Both my mother and her mother were school teachers. My Uncle Frank Devine was a developer in the 1950's of what was called "Programmed Learning." It was part of his PhD in ed psych.

Function and Usability Determine Technology

Blending before it was called "Blended"

Some years ago I started a new course, with Nicole English, for the UMKC conservatory's dance division to fulfill its technology academic requirement. The course taught 3D animation to dancers by having them use a program called "DanceForms" to "notate" their dances on computer, producing a video of a piece they had choreographed. It was a 3-hour face-to-face class on Friday afternoons in a computer lab. But the dancers, who were in a performance program, were often in rehearsals on Friday afternoons (their courses of study were all geared toward performance, meaning they were required to be in a lot of shows).

The level of tech experience ranged widely, but even the dancer who declared, "Computers just don't like me," did well. Her strength was her understanding of dance. As features of the program were introduced and demonstrated, she could apply them to what she already knew. All the dancers could be seen going through various motions, such as hand movements, and comparing those motions to the palette to apply to key frames. In that way they learned to use the program to produce an animation of their own choreography.

So, to accommodate their various performance schedules, we set up a course which

1) supported all face-to-face resource needs and calendar needs,

2) could be used as an online reference and, for those who installed the animation program on their own computers, could be followed online,

3) added lab times elsewhere in the week when they could get help from us,

4) gave them space to work with and assist each other (dancers are very inter-supportive), and

5) provided a library of various media files, video and music, for use with animations, for anyone needing them.

It wasn't until a few years later that I began hearing the term "blended" classroom. For me, what we did was just designing the class to reach the students where they were. Even today I don't think of it as a "blended" class, just a class that met needs where they were. To me, the "blended" category seems to limit thinking about course design at the same time that it gives us a new category to work with. In writing the course I just did what was needed to match the students' needs and availabilities and to get the lesson projects across.

Here is a URL to an archive of the "Dance Tech" animation course on my resume site.

I've modified the course to work without needing a server, substituting some javascript (my original code), so that I can use it on a USB or CD/DVD data disk as a resume handout.

http://www.mikestrongphoto.com/CV_Galleries/LessonExamples/Dance%20Tech%20Animation/Default.htm

Ed Tech, a personal history

Ed tech, as it is now called, started for me some 60+ years ago when my uncle, Frank Devine, was getting his ed-psych post-grad degree in the 1950's. He was one of the early developers of "programmed learning." He was good at it. "Programmed learning" done well is effective but far more difficult than it looks. Just the same, within a few years commercial publishers jumped on the "programmed learning" label, turning out their old material with standard chapters and questions, no different than their existing text books, but with the new label (new wine skins, old wine, so to speak). "Programmed learning" was a publisher's buzzword for several years. Before long the reputation of programmed learning was all but destroyed. The buzzword itself faded into the background.

I've watched a parallel development with web-tech learning software. I was an early coder, starting in 1999, creating code for a new fully online degree program (BIT - Bachelor of Information Technology). I still have a learning framework developed in javascript and based on my uncle's frames. It still works, though I've had no occasion to deploy it in a long time. Others were developing learning software too. There was a lot of hype and there were a lot of claims. There remain a lot of claims, usually not backed up with studies, rich with the same smell of snake oil that I've seen in software for as long as I've been programming.

I've also seen a lot of learning "platforms," such as Blackboard, which are today's version of those commercial publishers in the 1960's who jumped on programmed learning as a sales buzzword. Blackboard is really just another server system with nothing special in terms of learning software, and its quiz system is equivalent to those 1960's publishers' end-of-chapter quizzes. So far these platforms are still selling like gangbusters, and they are not cheap.

Rolling Your Own vs Packaged-Ed Programs - i.e. local vs outside

My biases, up front, are local. I have a very strong bias for using locally produced products or services. (1) Partly that is because I grew up in a small-business household, a glass service. (2) Partly it is because if we don't support each other face to face, how do we expect our neighbors to support us? (3) And partly it is because, in my experience, locally produced goods and services are better targeted to local needs, simply because we generally know our own needs better than anyone from outside. The last reason, a seeming paradox, is that we are also part of a large community of knowledge exchange that comes from traveling and living in larger cities. The population of New York City, for example, is 37% foreign born, and other large world cities are similar. You can add a fourth (4): the same local knowledge allows quick and close maintenance, with a "hand-on-the-plow" feeling.

And that 37% foreign-born figure clearly does not count all the people we know who come from small towns and go to NYC, either permanently or returning after a few years or even decades. That suggests that people born and bred in NYC are probably a minority there. The city becomes a rich mixing place where information and practices are exchanged as a matter of living and working together. Meanwhile, people have always had some sort of interchange with distant places. In the last 30 years, with the internet and the World Wide Web (the linking system that runs on the internet), we have all become a massive global city, rubbing elbows with each other more closely than ever. The HTML code itself is instantly available to any coder who right-clicks in a browser window to get a pop-up menu with a "View Source" option. Indeed, we can often work with each other across vast physical distances, distances reduced to the reach of our arms to a keyboard and mouse.

Regarding the first two points, even my cameras, which can't be produced locally, nonetheless can be purchased either online or locally. I paid an extra $300.30 for my Nikon D850 body, in Kansas sales tax, and waited for weeks for the body to come in, because I wanted to support my local store, the only real camera store left in the Kansas City metro area, Overland Photo. When I travel between Kansas City, Missouri and Hays, Kansas I try to see whether I can make Topeka in time to get to Wolfe's Camera at 7th and Kansas Ave. And, when I really can't get something locally, either when I go in to the store or ordering through them, I will go online, usually New York City for B&H or Adorama.

On the third point, in programming, I found that a programmer who works the job for which the program is written does a much better job of making the program act like a real extension of the worker's needs. The interface, depending on the programmer's skills, might not always be as slick as an outsourced program's, but it is often a better fit for the local job needs. Remember that programmers are on the front lines of people working directly with each other across the globe, no matter how small or large the places they work from. Still, there is always something to be said for true face-to-face work.

By going outside, an organization is often passing up highly skilled and capable resources close by. The irony is that the outside programmers also are local - to the place they live. And programming something to a set of descriptions based on meetings, and project papers and notes and questions is not really enough for fine tuning. There is too much distance, small as it seems, between the programmer and the user of the program. The two need to work together, and ideally, the programmer should 1) also do the work of the user and 2) oversee and watch the user to determine whether the program is really understood by the user and whether the programmer really understands the user. That's a one-on-one kind of task. It has to be done in person, at "the desk." Meeting in board rooms, even next door to "the desks" is a wee bit distant.

In 1983 as a meeting coordinator programming PC's for a management office I needed to develop a mailing list of thousands using temporary workers. I found out very quickly that my interface needed adjustments as I watched the data entry temps type in addresses and other information for our database. Sometimes the operator wouldn't see items on the screen, would misunderstand prompts or might have fields which didn't fit the information and so invented, on the fly, ways of shoehorning the information into fields as they were. In each case, I had two choices, more training or a better program interface. In almost all cases I realized the responsibility and best answer was for me to change the program to fit the needs and understandings of the operator. I wound up with a much more useful program that way.

Later, in the early 2000's, UMKC changed its system software for classes and enrollment from software written by UMKC programmers to PeopleSoft. I remember meetings in 1999, before PeopleSoft was purchased, in which I argued for retaining the local programmers (not me - I was working in classroom software development - and, side note, I didn't care for Blackboard then, or now). The change to PeopleSoft was made regardless, millions were spent, and we never, ever got back the simple utility of much of the home-grown software we had been using. Further, the talent migrated elsewhere.

A bit later I was hired to oversee new web sales software at American Crane and to build a web interface for the company leading to the new software. They had hired a large firm out of Dallas to make a fully new version of the sales software that my boss had originally written. American Crane sold to wholesalers only, and instead of the typical shopping-cart interface, the original software sent a row-and-column display, like a database's spreadsheet view, with hundreds or even a few thousand lines (records) at a time. It looked a bit homespun, but it worked well. Even so, the company wanted to update the site, and the best conventional wisdom, as it often does, said to hire outside experts because this is all the outside experts did. It certainly seemed like the right decision and is a common choice. It also cost a good deal.

Once done, the outsourced software worked nicely on local machines in demos in the office, but when it went live we had page-size problems. Many of our customers were overseas, in South Africa, Bangladesh and so forth. They had much slower lines, and the new software created HTML pages with full tags. Each page was a huge load, and the customers said they couldn't use our site to buy our parts because the pages kept timing out for them. So I did an interim fix by reworking the scripting software: instead of creating a fully formed HTML page whose internal structure added a ton of file size, I sent the page information in a very compact delimited data form and then expanded it to full HTML on the client side using javascript.

The only catch was how much memory headroom each client had on their particular machine in their particular web browser. Nonetheless, this solved the problem of long load times and made those remote locations snap again. (I should note that they sold third-party Caterpillar parts to contractors across the globe. Some locations were very remote.)
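The idea behind that fix, sending compact delimited text and rebuilding the HTML on the receiving end, can be sketched in Python for illustration (the real fix ran in javascript in the browser). The tab and newline delimiters, function names and sample part rows are assumptions for the sketch, and it assumes no cell contains a tab or newline:

```python
import html
from typing import List, Sequence

# Assumed delimiters for this sketch; cell values must not contain them.
FIELD_SEP = "\t"
ROW_SEP = "\n"

def encode_rows(rows: Sequence[Sequence[str]]) -> str:
    """Server side: pack table rows as delimited text instead of full HTML."""
    return ROW_SEP.join(FIELD_SEP.join(cells) for cells in rows)

def expand_to_html(payload: str) -> str:
    """Client side: rebuild HTML table rows from the packed text."""
    rows: List[List[str]] = [line.split(FIELD_SEP) for line in payload.split(ROW_SEP) if line]
    return "\n".join(
        "<tr>" + "".join(f"<td>{html.escape(cell)}</td>" for cell in cells) + "</tr>"
        for cells in rows
    )

if __name__ == "__main__":
    # Hypothetical parts list: part number, description, quantity, price.
    sample = [[f"PART-{n:05d}", "Track roller", "12", "145.00"] for n in range(2000)]
    packed = encode_rows(sample)
    page = expand_to_html(packed)
    print(f"sent: {len(packed):,} characters; rebuilt HTML: {len(page):,} characters")
```

The more markup each cell carries in the full HTML (attributes, styles, links), the larger the saving, at the cost of doing the expansion in the client's own memory.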

The first thing I should note (this is important) is that no programmer was trying to do a shoddy job. There should be learning, not blame. The outside programmers worked hard and with dedication. The problems were never from lack of effort but from lack of personal "user" experience. They weren't using it themselves. They were working to specifications from descriptions and questions, generally at some distance from actual production (such as meetings, phone calls, faxes, sometimes in our office and sometimes with us traveling to Dallas). You can only get so close to the goal that way. At some point you have to be almost in the same chair at the same desk at the same time.

In the spirit of number 4 above, I'm also a proponent of putting together web sites that can be maintained and updated locally, meaning the office or outfit the site represents should have the ability to make their own modifications. I don't want someone dependent on me to change their site for them. A site doesn't have to be fancy or clever. No one comes to a website to be impressed by your cleverness. People come to a website for information. The clearer and simpler the information is to access the better and more effective. The site is providing an information service, not a showplace for a web designer's theatrics. Nor should a website give a trendy and fuzzy impression for your organization. That doesn't mean it can't look good. Local talent is not to be underestimated.

One more example of local expertise: a personal story from the Air Force. It is one of those city-slicker-gets-out-slicked-by-the-hicks stories. One of the many teams I was on in the Air Force was for a job in Green Bank, West Virginia, at the National Radio Astronomy Observatory (Green Bank Observatory), probably in 1970 or 1971. I didn't observe this directly, but one of the other team members related it to me. One of our team, known for boasting, had gone into the nearby tiny town to shoot pool. He figured he could take the locals because he was from New York.

We'll call him Gary. The guy who told me the story was the one who spotted Gary coming in the front entrance of the observatory very early that morning, shorn of a few personal items. Apparently Gary had bet the locals the shirt off his back, and his socks. He returned wearing a jacket, pants and shoes. For the rest of our job there Gary stayed at the observatory when the guys headed in to town. They were asked when they were going to bring the fish back. The locals, of course, knew every single inch of surface and every break point on their pool tables. Local expertise.

Rolling Your Own Ed Tech

This is not as scary as it sounds. Chances are you are already doing a version of this. The ed-tech software we see can have bells and whistles but those sometimes change with each update. In essence, the promise of artificial intelligence interfaces which will allow teachers to replicate themselves as they place their courses online is pretty limited. That's me being kind. For the most part, teachers are placing their material on a web server using the content management system from the tech supplier when they could be doing the same using Dreamweaver on any web server. Ed-tech interfaces do allow teachers to construct quizzes but not the still-promised AI. Most is pretty basic. As I stated above, most of this reminds me of what I recall happening to "programmed learning" in the 1960's. The delicate structuring in programmed learning was not implemented when publishers began producing their "programmed learning" books and instead wound up being the same old chapters followed by a list of questions. It may have looked like an easy formula but it wasn't. Real programmed learning was costly, exacting and time consuming to produce.

Elsewhere on the page I've noted my own objections to using CMS's (content management systems) rather than typing pages in Dreamweaver. The original idea of CMS's was that web coding was too hard to expect large numbers of people to use, so CMS's are systems which could be edited on the web, directly, by anyone. The problem for me with this argument is a deliberate blind spot. While Dreamweaver is an HTML editor, it is a WYSIWYG HTML editor (what you see is what you get, pronounced WIZZ-ee-wig, a term not much used anymore since everything is WYSIWYG). The argument conveniently leaves out two major factors: 1) CMS's have their own learning curves, which are at least as steep as Dreamweaver's or steeper, with updates that change the rules and awkward, sluggish response times. 2) If you can operate a word processor, such as Word, then Dreamweaver should be a slam dunk. Dreamweaver is simpler than Word, with fewer options and convolutions, and it changes how it operates less often than the usual CMS. You can use it almost like a word processor. The only real learning curve comes with understanding where your files are (source and web copies) and how and when to move your document from your own desktop to the web location.

Often, because I couldn't get what I wanted in Blackboard, including use of javascript to create my own in-page review quizzes, CSS style sheet(s) and my own menus, I would write my pages in Dreamweaver, drop them into Blackboard's resource files and then link to those resource files from Blackboard's CMS front end. I have to admit that, as an old coder, I chafe at the limits of Blackboard's controlled structure. Actually I find it a bit insulting, because I don't see anything Blackboard pages have that I can't already do in Dreamweaver far more fluidly. Just changing fonts and setting attributes for heds and body text is like going backward to the very first browser code, before stylesheets, with a much more awkward interface. I remember the first time I really looked at the code Blackboard created to control style items (fonts, size, bold, etc.) and realized how much extra, redundant code was generated compared to the compact and elegant way of adding styles with CSS.
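The size difference between repeating formatting on every element and declaring it once in a stylesheet is easy to demonstrate. The markup below is a hypothetical stand-in for old-style, tag-per-element formatting, not the actual code Blackboard generates; the function names are illustrative:

```python
from typing import Sequence

def inline_styled(paragraphs: Sequence[str]) -> str:
    """Pre-stylesheet approach: the same formatting repeated around every paragraph."""
    return "\n".join(
        f'<p><font face="Arial, Helvetica, sans-serif" size="3" color="#333333"><b>{p}</b></font></p>'
        for p in paragraphs
    )

# One rule covers every paragraph; HTML font size 3 corresponds to CSS "medium".
STYLESHEET = ("<style>p { font-family: Arial, Helvetica, sans-serif; font-size: medium; "
              "color: #333333; font-weight: bold; }</style>")

def css_styled(paragraphs: Sequence[str]) -> str:
    """CSS approach: declare the formatting once, then use plain paragraph tags."""
    return STYLESHEET + "\n" + "\n".join(f"<p>{p}</p>" for p in paragraphs)

if __name__ == "__main__":
    text = [f"Paragraph {i} of the lesson." for i in range(40)]
    print("inline formatting:", len(inline_styled(text)), "characters")
    print("stylesheet:       ", len(css_styled(text)), "characters")
```

With a single paragraph the stylesheet version is actually longer; the savings come from repetition, which is exactly where per-element formatting piles up, and a change of font then means editing one rule instead of every tag.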

Once you have teachers writing their pages in Dreamweaver instead of Blackboard, you also have pages in your own file system (under your own control) rather than existing purely in some "cloud." You also now have the task of running the database that directs enrolled students to your pages, lets teachers know who is in the class (enrollments) and keeps track of grading and status in the school. That is a completely separate operation from writing content. Creating interactive learning nodes, such as quizzes or step-by-step tutorials, is a third area whose mechanism is separate but whose application is connected to each class exercise.

"Getting Across"

"Getting Across" is a stage term which means you have an audience that is not just watching you, but engaged with you and supporting you. Kansas City's Ronald and Lonnie McFadden have been performing since they were kids with their father. They tap, play horn and sing. In an interview by Billie Mahoney on her show, "Dance On" (a show I shot and edited - see my 1hr 14 min sampler here: http://www.mikestrongphoto.com/CV_Galleries/VideoEmbed_DanceOn.htm), Lonnie talks about a time when the pair were performing in Japan. They hadn't tapped in years. Their audiences were appreciative, but the brothers felt they still needed something else to connect. So they decided to start tapping again.

When they brought their taps to the club and started tapping, "that," said Lonnie, is when they "got across" to the Japanese audiences. Suddenly the appreciative audiences were right there with them. They got across. It wasn't the tap tech, so to speak, but their performance in tap. Here are two URLs to National Tap Dance Day performances shot and edited by me. In both cases Billie produced the shows:

1) at The GEM 2001: https://www.youtube.com/watch?v=wmyOgBGzQLQ

2) at the Uptown Arts Bar (now closed) 2014: https://www.mikestrongphoto.com/CV_Galleries/VideoEmbed_NationalTapDance2014.htm This one uses three stationary cameras I set up so I could take stills. That's me in the red shirt and blue jeans, seated and moving, DSLR to my eye. Each still picture is flashed on the video for several seconds, at the point I shot it.

Back to the idea of getting content across effectively. Once upon a time, such as when I started in 1967, it was thought that writers couldn't shoot and shooters couldn't write. Beliefs about human ability were limited by a belief in fixed categories. This was at the same time that a performer who could act, sing and dance was considered a triple threat. It was also the same time that I was trained in both photography and writing. Good thing, too. Too often, when I was looking for a photographer job, the position was already filled, sometimes only recently (as at The Geneva Times), or the employer was a radio station and didn't need a photographer (different today, when radio stations have websites), but they had a writing job as a reporter. So I did both at a time when combining job tasks was unusual. Today most reporters, and even photographers, are expected to do both jobs. Call it a multi-job.

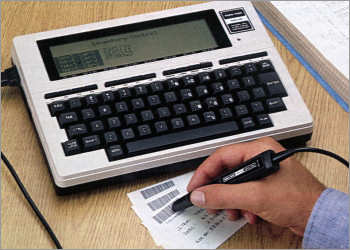

My multi-job equipment consisted of a manual typewriter (a Royal, later an IBM Selectric with an OCR ball after they bought a $34,000 scanner, which would be less than $100 today, to replace a human typesetter), a package of newsprint cut down to 8.5x11-inch paper, a large pair of shears, a large can of rubber cement to paste the newsprint pages into a long roll carrying the full story, my own cameras (35mm, a medium format Mamiya C3 [twin-lens reflex] and a 4x5 Crown Graphic), my own darkroom equipment, developers and enlargers, and a police scanner.

We didn't have the internet then, so the story was not transported via email or other upload. The Geneva Times hired a local resident from the area I covered (the southern part of Seneca County, New York) who worked in Geneva, New York, as a courier. The courier came by my place each morning about 5 am to pick up my stories, then left the envelope with my stories and any negatives and/or prints I had processed at the newspaper before continuing on to work. News from outside our area came to us constantly via teletype.

It was on The Geneva Times (now the Finger Lakes Times) that I learned:

1) Memory is re-created and modified on retrieval (not retrieved like computer data). People whose initial memories of a meeting we had both attended differed from mine would read my story in The Geneva Times the next day and agree with my account. That gave me a reputation in the area for accuracy and fairness. A local resident who was another reporter told me about this reputation, which was good to hear because I wasn't too sure how I was viewed as a reporter for the local daily newspaper.

2) Deciding to make that reputation even better, I rolled out the tape recorder I had used at WGVA. I thought the audio recordings would make things easier. I found out that handwritten notes were much better than a recorder because

[a] handwriting engaged my brain to keep summarizing what was just spoken, giving me a head start on typing out the story, and

[b] by the time I was done I had several different meetings: the one I was in, the one I remembered going home, the one I heard on the tape, the one I read from the notes, the one I typed up and the one that was printed in the paper, with which the readers agreed.

[c] Now I can add my present memories of those stories as yet another version. Even with a recording, I learned, there are few fixed "facts."

When I worked on radio we would pull the stories off "the wire" at regular intervals. Usually we took time to read the stories first, but once in a while it was "rip and read," which meant a cold read, risking stumbles on unexpected words. The Geneva Times printed and distributed its daily (Monday through Saturday) edition via trucks. The pages were paper pages with text and pictures. And often the local radio stations would read our stories, including mine, on the air. Such was the multitude of media we had. Opening the wide pages of paper was its own physical experience, which merged into a mental experience of reading content. Today's media is also an experience, concentrated in devices if not in content, and the device continually distracts us from that content. There is something about paper which allows immersion in the material contents, something devices do not allow.

That was the mid-1970s, and the still-new word "multimedia" meant a set of slide projectors, maybe a 16mm movie projector and a few lights: mostly the workings for a stage show. The first online services, acting as portals, such as CompuServe, AOL and GEnie in the 1980s, were the real start of mixing media to deliver stories and other narrative information. The World Wide Web (started in 1989) really deployed multiple media in the delivery system during the 1990s. Essentially the web page was now the show, and the meaning of "multimedia" shifted to the main purpose of web pages, providing information, rather than a stage or event show.

Narrative

Start with the idea of a story, call it journalism or entertainment or teaching or just living. The story / narrative comes first if you want to retain attention for any length of time. What do you need to get across to someone? What do you need to accomplish that task? So we have text as the most basic element, perhaps supported by images and maybe video or animations and published on electronic media. HTML pages are really the same thing as any previous pages on paper, such as magazines, books and newspapers. The "ML" in HTML stands for "markup language."

Anyone who worked before SGML or HTML code remembers using pen or pencil to mark up a typed page or a galley proof with spelling corrections, font designations and paragraph breaks, adhering to the publication's own "style book" as well as the widely used Associated Press (AP) Stylebook. If you've ever worked on a publication with letterpress printing and type set on a Linotype (with its wonderful click-clanking sounds and the pot of molten lead on the side), you remember lines of type, each set to a line height, with thin strips of lead used to add space between those lines of cast-lead type. It was called "leading," and you can still set "leading" electronically to increase line spacing, but with a very different method.

Whoever controls the narrative controls the world

Do not imagine for a second that social media on a large scale exists for the benefit of the people, or that you are not being surveilled. Censoring social media, regardless of how repulsive the censored source is, means narrative control. Forget trench coats and shady phone tappers sneaking into building basements. Now people jump at the chance to acquire personal surveillance. How many NPR stations tell their listeners to "tell your smart speaker to tune to (their station)"?

Facts, Sacred Facts and Methods

- Validate your sources: how well do you know them?

- BS detector - a.k.a. experience and disillusionment.

- Does the claim make sense?

- Is there evidence presented or is it just "something" presented?

- Does this have a familiar ring?

- Is this instantly jumped on and honored by mainstream journalists? If so, back off and look again.

- If this is a "canonical" narrative, look at it again, freshly. Solidly established narrative claims are often fables.

- Look again at how we accept most government pronouncements. No checking. That is a problem for journalism and for any ideas of democracy.

- ... and further skeptical questions ...

There are a few basic principles common to all questions raised by journalism, which are needed to get around the usual gaslighting:

- Follow the Money - a search for motive as based in money. Usually this will work to direct your research. This is also where most journalism courses leave it.

- Cui Bono? - Latin for "Who Benefits?" - almost the same as above but not necessarily about money; usually about power or power relationships or ideas of who should rule and who should serve.

- What is the oppression and what are the divisions? - This usually comes up for people in revolt, reacting to mistreatment and victimization, often built up over a long time.

- And there is always the possibility of no rationale at all, just plain meanness, hatred, spite, vindictiveness, rage and (add a few yourself here).

- Finally, understanding power vectors, George Carlin's superb analysis of power: "It's a big club and you ain't in it. You and I are not in the big club." (i.e. who is in and who is not in "the club")

A Rant and a "Leak" - personal example

“Leaks” are not well understood. They are often official dish, trial balloons or just a back-alley way of getting at rivals, passed along under all the “off the record,” “backgrounder,” “from a high official” and “high official said” attributions. And the general public seems to think leakers have committed an official betrayal of trust or a crime. In the "mainstream" narrative these leakers are such people as "Deep Throat," Daniel Ellsberg, Chelsea Manning, Katharine Gun, Julian Assange (see below), Jeffrey Sterling, and so forth. Those are real leakers, all right, and true heroes, people with ideals. But they are only the most public cases and a small percentage of the total. More often officials want to feed you their information so that you publish it as your story. Often they do a story version of double dipping by then getting interviewed about the material they leaked.

In the mid-1970s, as an area reporter for The Geneva Times (now the Finger Lakes Times), I was covering a county assembly meeting for a vacationing reporter when the Republican county attorney took the podium to point me out to the assemblage and excoriate me and my reporting. It was a surprise but didn't bother me. I figured John saw a chance to blast away at me in front of his buddies and took it.

The next Tuesday I got a phone call from his secretary asking me to come by. They had something for me. I assumed it was some sort of complaint. Instead, John’s secretary handed me a large, sealed manila envelope. It was filled with documents aimed at discrediting a political rival, a Democratic judge who had been elected a year or a year and a half earlier, in 1974, in a campaign in which Republicans smeared him. It was a pretty dirty campaign for those times. Mild compared to 2020. Clearly they weren't giving up.

In openly tearing into me at the county assembly meeting, basically a bit of theater, John gave himself cover to avoid being suspected of being the source for the information he handed me a couple of days later. I headed for my editor and we perused the material. It was Chris’s beat and I was just filling in, so we handed it to Chris for his story material, when he returned from vacation. I don't remember what happened to it after.

So that was how I learned the real nature of many "leaks" and "authoritative sources." Add terms such as "off the record," "backgrounder," "access," "from a high official" and so forth. These are cozy-sounding terms which really mean the reporter is getting government or office-holder propaganda (agenda) passed on as verified, substantial information when it is really just a handout and should be checked with real shoe leather. It is a back-alley route to controlling the narrative by "infowashing" it through reporters who pass it on as a "scoop" (a term we used to use, though I haven't seen it in years).

Reasons for classifying information (hint: most are excuses to avoid oversight)

Lima Site 85 in Laos, a "Combat Skyspot" operation (LS-85) started November 1967, massacred March 11, 1968, declassified in 1998.

Links: The CIA link is longer and more anodyne, but has added detail about previous operations at the location.

1 - https://en.wikipedia.org/wiki/Battle_of_Lima_Site_85

2 - https://www.cia.gov/library/center-for-the-study-of-intelligence/csi-publications/csi-studies/studies/95unclass/Linder.html

3 - https://en.wikipedia.org/wiki/Lima_Site_85

The very name "Skyspot" was secret when I was in the Air Force. Skyspot directed munitions and bombs over North Vietnam. LS-85 was in a far northeast corner of Laos, poking directly into North Vietnam. It lasted from November 1967 through March 10/11, 1968, when it was overrun by the North Vietnamese. The location was chosen for installation of "command guidance" radar for bombing North Vietnam because it was already a CIA base with Hmong support troops and because it was very close to North Vietnam, too close. It was also a darn stupid idea. A little like putting the guns of Navarone on an island just outside London and expecting a "secret" classification to keep the Brits from seeing the guns. In this case the guidance radar was placed this close to North Vietnam to increase the accuracy of the bombing.

But it was also darn obvious. A lot of people were killed. Had this not been secret, it is possible (though hardly certain) that someone with common sense would have called a halt. CMSgt Richard Etchberger was killed during the evacuation by an AK-47 round that came through the bottom of the helicopter and into his back. He died in the air, on the way back to base. His family was told he was killed in a helicopter accident, and they didn't learn the truth for 13 years. It was later still, in 2010, that Dick Etchberger was posthumously awarded the Medal of Honor. I'm sure his family would have preferred him alive, without the medal.

I learned about it earlier because I wound up in the same squadron as some of the people on that job. I didn't enlist until 7 November 1968, a year after the site was set up. I was trained as a geodetic computer, one of the occupations used for Skyspot ballistic calculations for bomb drops, and was later cross-trained as a geodetic surveyor, eventually working with some of the surveyors who initially set up LS-85 in November 1967 and who had returned to our squadron before the site started to be hammered by the North Vietnamese.

This was also secret because a lot of rules were being broken, including a prohibition on troops in Laos, so our guys went to the site in civvies with fake IDs claiming they worked for a civilian company. The CIA had been running this operation in violation of Laotian diplomatic restrictions for several years. So a lot of people were there who were never there. Because it was classified, it was wide open for abuse and for bad planning without oversight. Classification as secret is usually justified as protecting lives. Here, by avoiding oversight and criticism, secrecy cost lives.

Something that didn't cost lives, but exposed crimes that took lives, was WikiLeaks. See below.

The Exodus - using comparative literary analysis to assess an author's accuracy